The Energy Anatomy of AI: where the Watts go

This post is part of the Artificial Intelligence value chain series.

About this series

I’ve spent the last few months trying to understand how artificial intelligence really works - not the algorithms, but the machinery underneath: the power, the chips, the data, and the economics that hold it all together.

Most of that information is either scattered, too technical, or locked in research papers.

So I decided to map it myself, one layer at a time.

The Artificial Intelligence Stack series is a 6-layer series that breaks down how the AI value chain actually functions - from the energy that powers it to the strategies that shape it.

Every week, there will be 3 posts on one layer, one each from the foundations, economics, and participating-players perspectives.

This post is part of the Energy layer.

Consider subscribing to the newsletter for free to receive future posts in this series.

Every time I open ChatGPT, it feels like magic.

I type a line and it replies instantly, as if intelligence just exists in the cloud.

Weightless. Effortless.

But the more I dug into it, the more it started feeling… physical.

Because behind every neat little line of text is a ridiculous amount of electricity.

The internet ran on bandwidth.

AI runs on power.

And that one difference, electrons over algorithms, is already changing how countries, companies, and investors think about strategy.

So where’s all that power going?

Let’s start from the top.

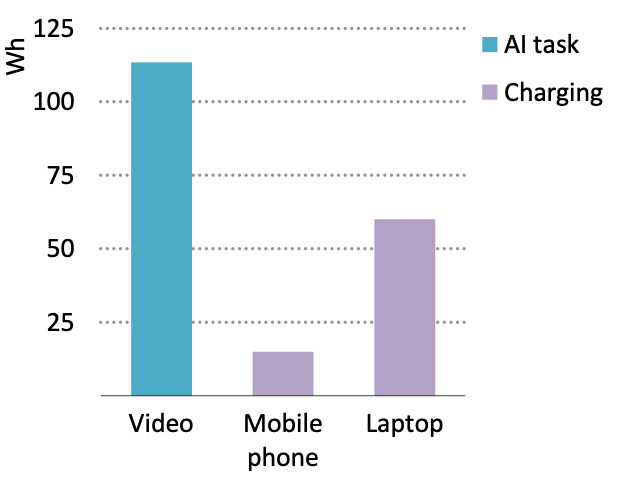

The electricity intensity of different generative AI tasks varies greatly - generating a single short video can be as energy-intensive as charging a laptop twice.

The energy flow beneath this number is where things get interesting (and, eh, a bit technical!).

After multiple rounds of reading and drafting to simplify things, this is how I read the electricity flow across the value chain:

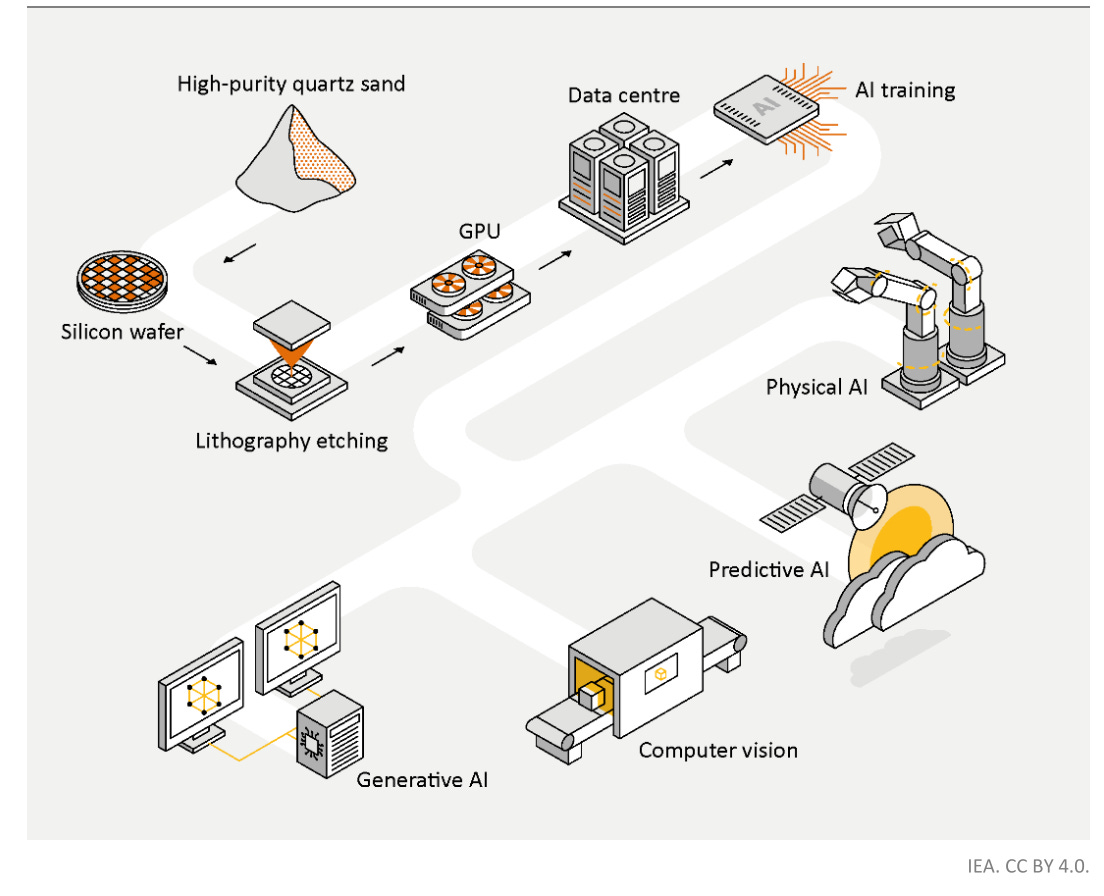

It starts with semiconductors - the physical brains.

Every “intelligent” output, a line of text, a generated image, a voice clone, begins with electricity moving through chips.

And these chips are not your old-school computer processors.

Each high-end chip is carved out of silicon using EUV lithography machines from ASML that cost half a billion dollars each.

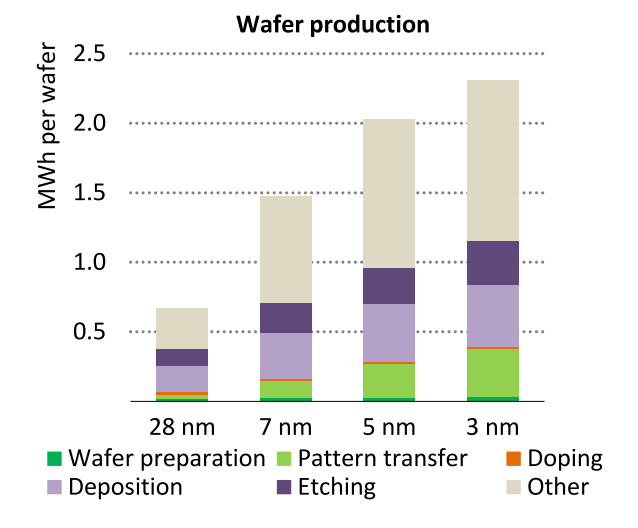

New, more complex chip types require more energy, especially for lithography and deposition, but manufacturing amounts to less than 20% of the total life-cycle demand

Every 3-nanometre wafer takes about 2.3 MWh to make - roughly what an average Indian household uses in four months.
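A quick way to sanity-check a comparison like this is to back out the household figure it implies. The sketch below uses only the two numbers quoted above; the function name is just for illustration, and the result is the usage the comparison assumes, not a measured statistic.

```python
# Back-of-the-envelope check using only the figures quoted in the text.
WAFER_ENERGY_MWH = 2.3   # energy per 3 nm wafer, as quoted
HOUSEHOLD_MONTHS = 4     # "four months" of household use, as quoted


def implied_household_kwh_per_month(wafer_mwh: float, months: float) -> float:
    """Monthly household usage implied by 'one wafer = N months of use'."""
    return wafer_mwh * 1_000 / months  # 1 MWh = 1,000 kWh


implied = implied_household_kwh_per_month(WAFER_ENERGY_MWH, HOUSEHOLD_MONTHS)
print(f"Implied household usage: {implied:.0f} kWh per month")
```

If your own reference figure for household consumption is lower than what this prints, one wafer covers proportionally more months.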

Globally, chip manufacturing eats up 100 TWh of electricity a year.

Taiwan’s TSMC alone accounts for 10% of the island’s total power use.

When you read that “chips are the new oil,” remember: they’re also the new electricity grid.

They’re beasts - GPUs, TPUs, and a growing zoo of custom silicon.

Each one draws 700 to 1,000 watts.

Then comes the data centre stage - the new industrial factory

A regular data centre used to consume 10–25 megawatts.

AI-specialised ones now run 100 MW or more, enough to power a city of 100,000 homes.

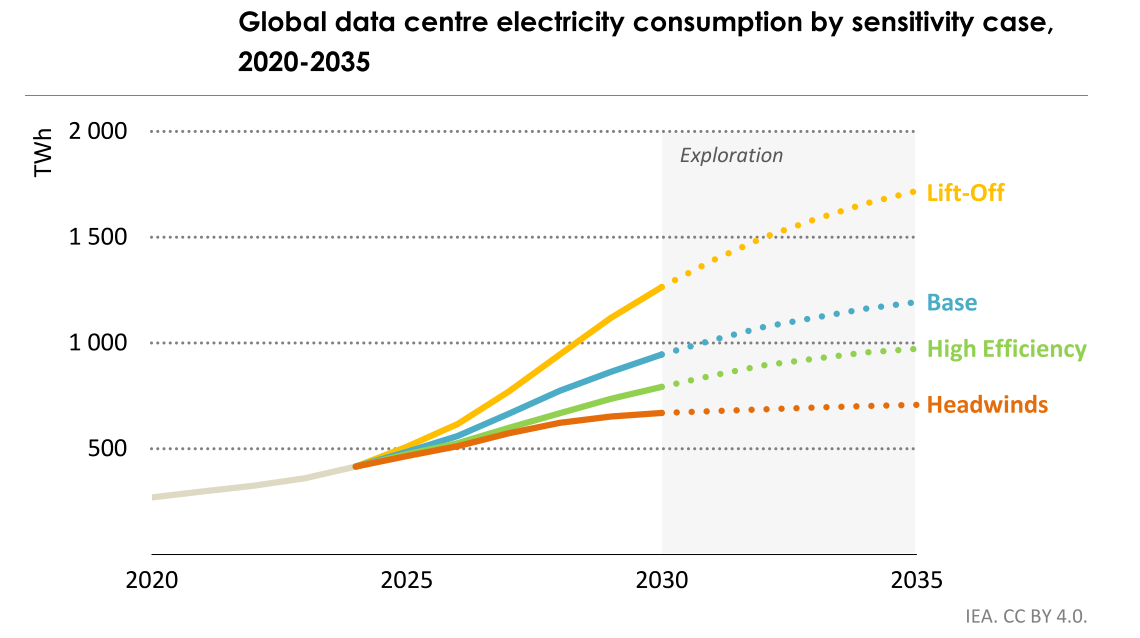

The IEA says data centres account for about 1.5% of the world’s total electricity use today and could hit 3% by 2030.

And within that slice, AI is the fastest-growing load.

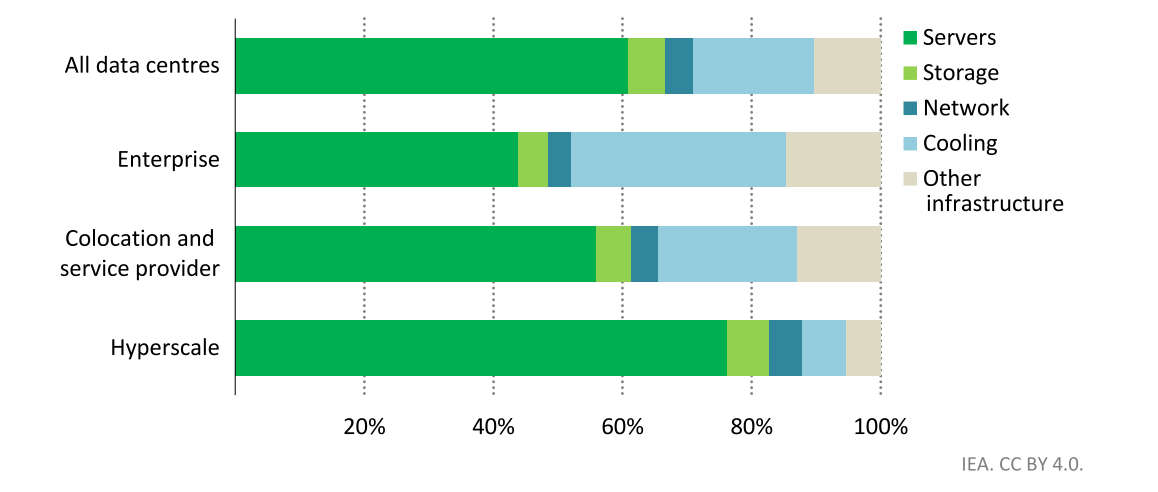

Servers alone consume about 60% of a data centre’s total electricity, and cooling systems another 10–30%, depending on efficiency.

That’s why hyperscalers are shifting to liquid cooling, immersion tanks, and even reusing waste heat for urban heating (Helsinki literally pipes it into homes).

The best-run hyperscale data centres now operate at PUE ~1.15, meaning for every 1 kWh used by the IT hardware, there’s only 0.15 kWh wasted in cooling and lighting.
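PUE (power usage effectiveness) is simply total facility energy divided by IT energy, so the arithmetic is easy to sketch. The 1.15 figure comes from the text; the 1.6 comparison value and the function names are assumptions chosen for illustration only.

```python
def facility_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy for a given IT load, from PUE = total / IT."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_kwh * pue


def overhead_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Energy spent on everything except IT hardware (cooling, lighting, losses)."""
    return facility_energy_kwh(it_energy_kwh, pue) - it_energy_kwh


def it_share(pue: float) -> float:
    """Fraction of facility energy that actually reaches the IT hardware."""
    return 1.0 / pue


# 1.15 is the figure from the text; 1.6 is a hypothetical less efficient site.
for pue in (1.15, 1.6):
    print(
        f"PUE {pue}: {overhead_energy_kwh(1.0, pue):.2f} kWh overhead per IT kWh, "
        f"{it_share(pue):.0%} of energy reaches IT"
    )
```

At PUE 1.15, about 87% of the electricity entering the building does useful compute work.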

Still, efficiency isn’t keeping up with growth.

And that’s where the grid begins to choke.

In Virginia, data centres already use 25% of the state’s electricity.

In Ireland, it’s 20%. Regulators have literally started rejecting new build requests because the grid can’t keep up.

AI is starting to compete with households and hospitals for electrons.

Next comes the Training stage

We have seen that each GPU draws at least 700 W of power.

Now multiply that by 25,000 (roughly what GPT-4 used during training) and the GPUs alone draw 17.5 MW. Add the rest of the server hardware, networking, and cooling on top, and you’re looking at something like a 22-megawatt setup - the same as powering 150 electric-vehicle charging stations running at full tilt.

That’s just for one model. One training run.

For the complete training of GPT-4, OpenAI reportedly ran this for 14 weeks straight.

That’s enough to power 70,000 Indian homes!!!
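The numbers above mix power (megawatts) and energy (megawatt-hours), so it helps to lay the arithmetic out. This is a rough sketch using only the figures quoted in the text, none of which are verified here; the variable names are mine, and since the homes comparison does not state a time period, the last line just shows each home's share of the total.

```python
# Figures quoted in the text (not independently verified here).
GPU_COUNT = 25_000
GPU_POWER_W = 700
SITE_POWER_MW = 22
TRAINING_WEEKS = 14
HOMES = 70_000

# Power: what the GPUs alone draw, and the overhead the 22 MW figure implies.
gpu_power_mw = GPU_COUNT * GPU_POWER_W / 1e6
overhead_factor = SITE_POWER_MW / gpu_power_mw

# Energy: power multiplied by time.
training_hours = TRAINING_WEEKS * 7 * 24
energy_gwh = SITE_POWER_MW * training_hours / 1_000

# Each home's share of the total training energy.
kwh_per_home = energy_gwh * 1e6 / HOMES

print(f"GPUs alone: {gpu_power_mw:.1f} MW (x{overhead_factor:.2f} to reach {SITE_POWER_MW} MW)")
print(f"Training energy: {energy_gwh:.1f} GWh over {training_hours} hours")
print(f"Share per home: {kwh_per_home:.0f} kWh")
```

On these inputs the run comes to roughly 52 GWh, or about 739 kWh for each of the 70,000 homes.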

This made me wonder whether the “thinking” part of AI already uses more power than the “living” part of many cities.

Wait, can’t we just add more renewables?

Yes and no.

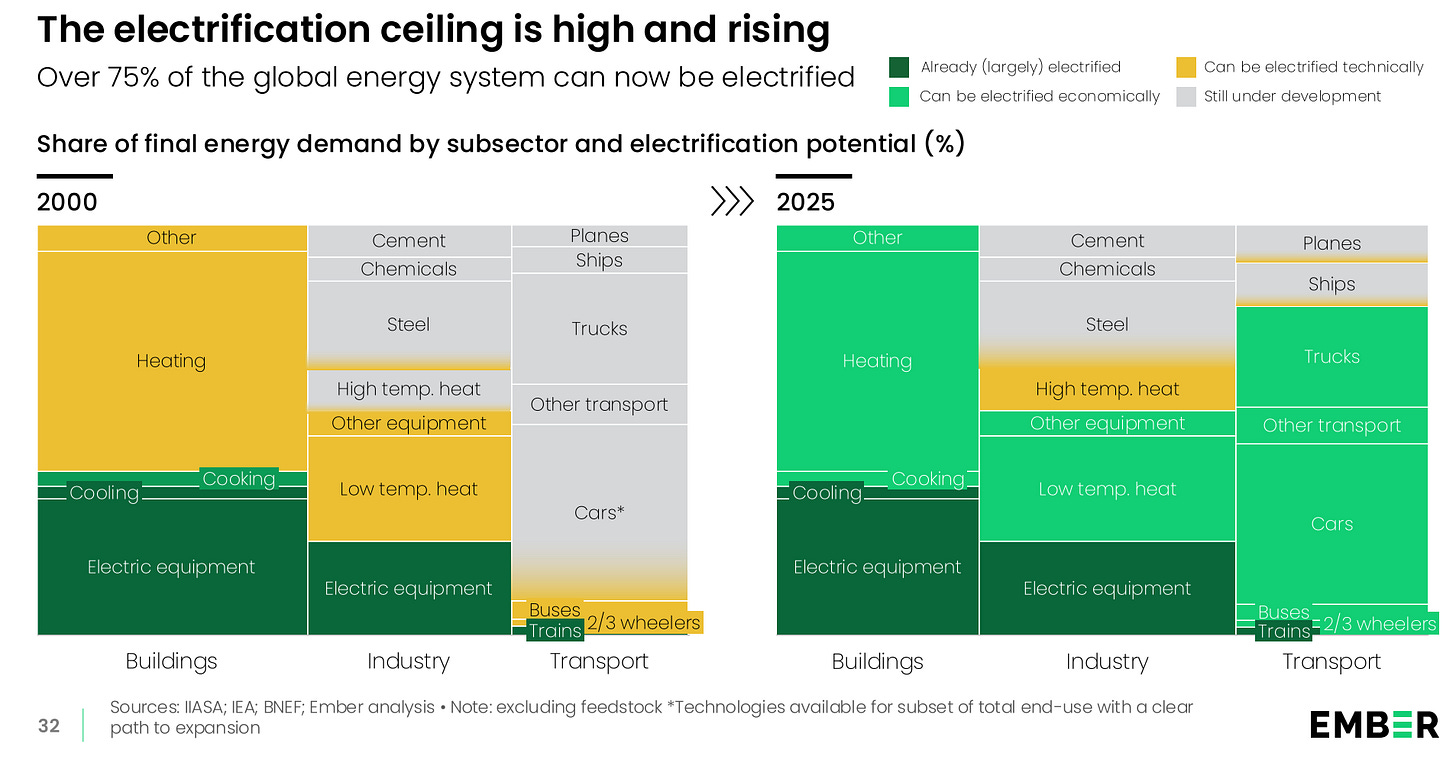

When we look at the shift happening in the sources of energy, things start looking promising:

After decades of cost innovation, electrotech is now cheaper than fossil fuels.

Key electrotech technologies have enjoyed exponential growth. We see this for generation (solar and wind), connections (batteries and software) and usage (EVs and heat pumps).

Over 75% of the global energy system can now be electrified.

Most hyperscalers - Google, Microsoft, Amazon - have signed renewable PPAs (power purchase agreements).

But these aren’t always physical contracts; many are financial, meaning they offset emissions on paper while still drawing real-time power from natural gas or coal.

The smarter play now is what I call Energy Vertical Integration 2.0 - tech companies becoming their own utilities.

Microsoft is restarting the Three Mile Island nuclear plant via a 20-year deal with Constellation.

Google invested in Fervo Energy’s geothermal project in Nevada.

Amazon is locking in 960 MW of nuclear supply with Talen Energy.

Meta signed 150 MW of geothermal capacity with Sage Geosystems.

This isn’t about ESG virtue-signalling anymore. It’s a cost and reliability hedge.

Electricity has become a strategic moat.

So, can we use renewable sources of energy for AI?

Yes, because renewables are already the cheapest new source of energy in most markets.

Every major hyperscaler has signed renewable PPAs: Microsoft, Google, and Meta are locking in 10–20 year deals for solar, wind, and now geothermal.

But here’s the “No” part that almost no one outside the industry talks about:

Renewables don’t run on your training schedule.

AI workloads, especially training, demand round-the-clock, high-density, uninterrupted power.

Solar drops to zero at night. Wind fluctuates by region and season.

And storing that energy at the scale of 100 MW data centres is still uneconomic and technically hard - batteries can cover minutes or hours, not days or weeks.
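To get a feel for why duration matters so much, here is a minimal sketch of how much stored energy a constant 100 MW load needs for different outage lengths. It only multiplies power by time; it deliberately leaves out battery cost, round-trip losses, and depth-of-discharge limits, all of which would make the real requirement larger.

```python
LOAD_MW = 100  # data-centre scale used in the text


def storage_needed_mwh(load_mw: float, hours: float) -> float:
    """Energy needed to carry a constant load for a given number of hours."""
    return load_mw * hours


# Durations chosen for illustration: hours vs. days vs. a week.
for label, hours in [("4 hours", 4), ("1 day", 24), ("1 week", 168)]:
    print(f"{label:>8}: {storage_needed_mwh(LOAD_MW, hours):,.0f} MWh")
```

Covering a week takes 42 times the storage that covers four hours, which is the gap between what batteries do today and what a training cluster would need.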

In practice, that means even “renewable-powered” data centres often pull backup electricity from fossil grids when renewable generation dips.

Google’s own reporting shows that most corporate PPAs are “financial”, not physical, meaning they offset emissions on paper but still rely on coal or gas in real-time operations.

So while you might see headlines like “Google runs on 100% renewable energy,” what it really means is that they’ve purchased enough renewable credits to match their annual consumption, not that their supply is synchronized with it hour by hour.

True “clean” AI would require hourly matching — using dispatchable clean power sources like geothermal, hydro, or next-gen nuclear that can run 24/7.
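The gap between matching on paper and matching by the hour is easy to show with a toy day. The sketch below uses made-up numbers: a flat 100 MW load and a hypothetical solar contract that delivers 200 MW for twelve daylight hours and nothing otherwise. The function names are illustrative and not taken from any reporting standard.

```python
def volumetric_match(load_mwh: list[float], clean_mwh: list[float]) -> float:
    """Share of total consumption covered by total clean purchases (capped at 100%)."""
    return min(1.0, sum(clean_mwh) / sum(load_mwh))


def hourly_match(load_mwh: list[float], clean_mwh: list[float]) -> float:
    """Share of consumption covered by clean energy in the same hour it was used."""
    matched = sum(min(load, clean) for load, clean in zip(load_mwh, clean_mwh))
    return matched / sum(load_mwh)


# Hypothetical 24-hour profile (illustrative numbers, not real data).
load = [100.0] * 24                                        # flat training load
solar = [200.0 if 6 <= hour < 18 else 0.0 for hour in range(24)]

print(f"Matched on total volume: {volumetric_match(load, solar):.0%}")
print(f"Matched hour by hour:    {hourly_match(load, solar):.0%}")
```

The same contract reads as 100% renewable when totals are compared and 50% when each hour has to stand on its own, because the midday surplus cannot cover the night.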

That’s exactly why Microsoft signed a deal to restart the Three Mile Island nuclear plant and Google is investing in Fervo Energy’s geothermal wells.

So yes, renewables are part of the solution.

But no — they can’t run a hyperscale data centre by themselves.

At least, not yet.

At the national level, this gets even more interesting.

China runs an integrated stack: coal for base load, hydro for renewables, and SMRs (small modular reactors) for the next decade.

It’s the only country treating compute like national steel capacity.

The U.S. still dominates the data-centre footprint, but grid congestion in Virginia and Texas is pushing growth into the Midwest (cheap wind) and Pacific Northwest (hydro).

Europe is struggling with high costs and grid limits - expect migration north to Sweden, Norway, and Finland, where hydro and cold weather help cooling.

Japan quietly restarted nuclear plants, a big deal because its data-centre growth now follows those revived grids.

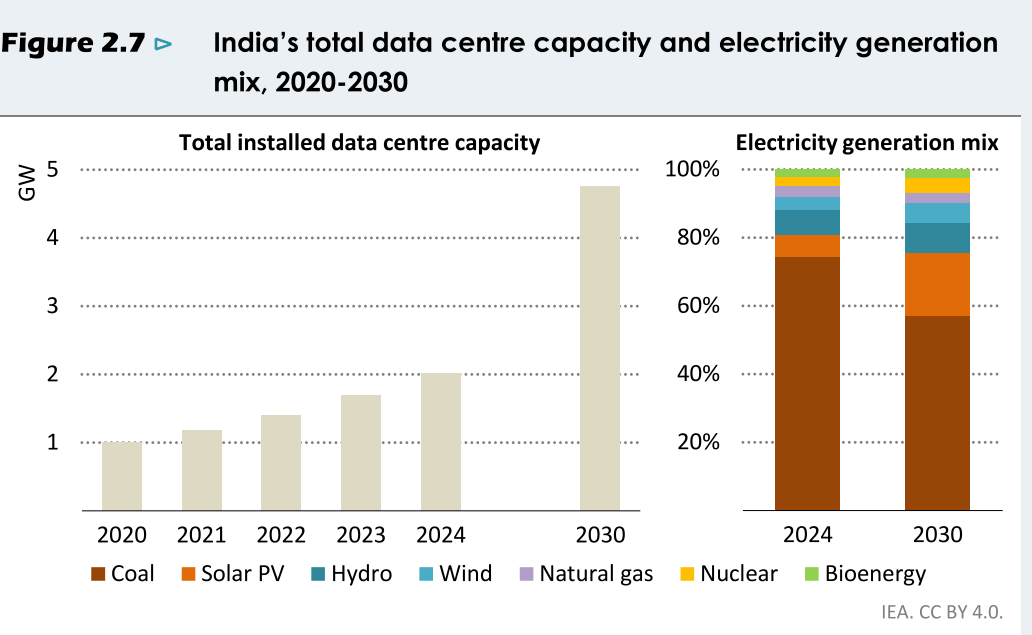

India, meanwhile, is on a tear.

Current capacity: 2 GW, heading to 5 GW by 2030.

Coal still fuels 74% of the grid, but data-centre players like Bharti Airtel Nxtra, AdaniConneX, and Yotta are signing renewable PPAs.

The government’s IndiaAI Mission aims to deploy 18,000 GPUs, backed by grid upgrades and state incentives.

India’s story is fascinating: while the West worries about grid limits, India’s problem is the opposite - building enough of it, fast enough.

And here’s the India/APAC twist

Most people still think of India as a back-office for data.

But if you look closely, the next decade could see it emerge as an energy-compute corridor - a place where renewable abundance meets AI demand.

Companies like ReNew, Greenko, JSW Energy, and Tata Power Renewable are already aligning capacity around data-centre hubs.

Pair that with fibre connectivity, stable regulation, and growing local AI workloads, and India starts to look like the APAC version of northern Virginia circa 2015.

This is where local investors and policymakers need to zoom in: the “AI boom” is really an energy-infrastructure boom in disguise.

The Next 12–18 Months

If we follow the curve, five things are about to happen fast:

Grid bottlenecks push data centres to places with surplus power - northern Canada, southern India, Iceland, Gulf states. (The new global game: compute arbitrage.)

Private microgrids rise. Hyperscalers will co-locate SMRs (small modular reactors) and geothermal projects with campuses.

Efficiency flattens. Moore’s Law slows; watt-per-operation gains plateau.

Policy wakes up. Expect “fast-track zones” for data-energy infra builds.

Capital rotates. Investors move from chips to energy tech - battery storage, cooling, SMRs, grid management.

A perspective I didn’t expect

When you peel away the hype, AI isn’t some ethereal magic trick.

It’s a giant industrial process - one that starts with minerals and ends with megawatts.

In the 1800s, steel reshaped cities.

In the 1900s, oil powered global trade.

In the 2000s, data reshaped communication.

Now, in the 2020s, electricity is quietly becoming the substrate of intelligence.

The more intelligent our systems get, the more they pull us back to the basics: energy, efficiency, and infrastructure.
